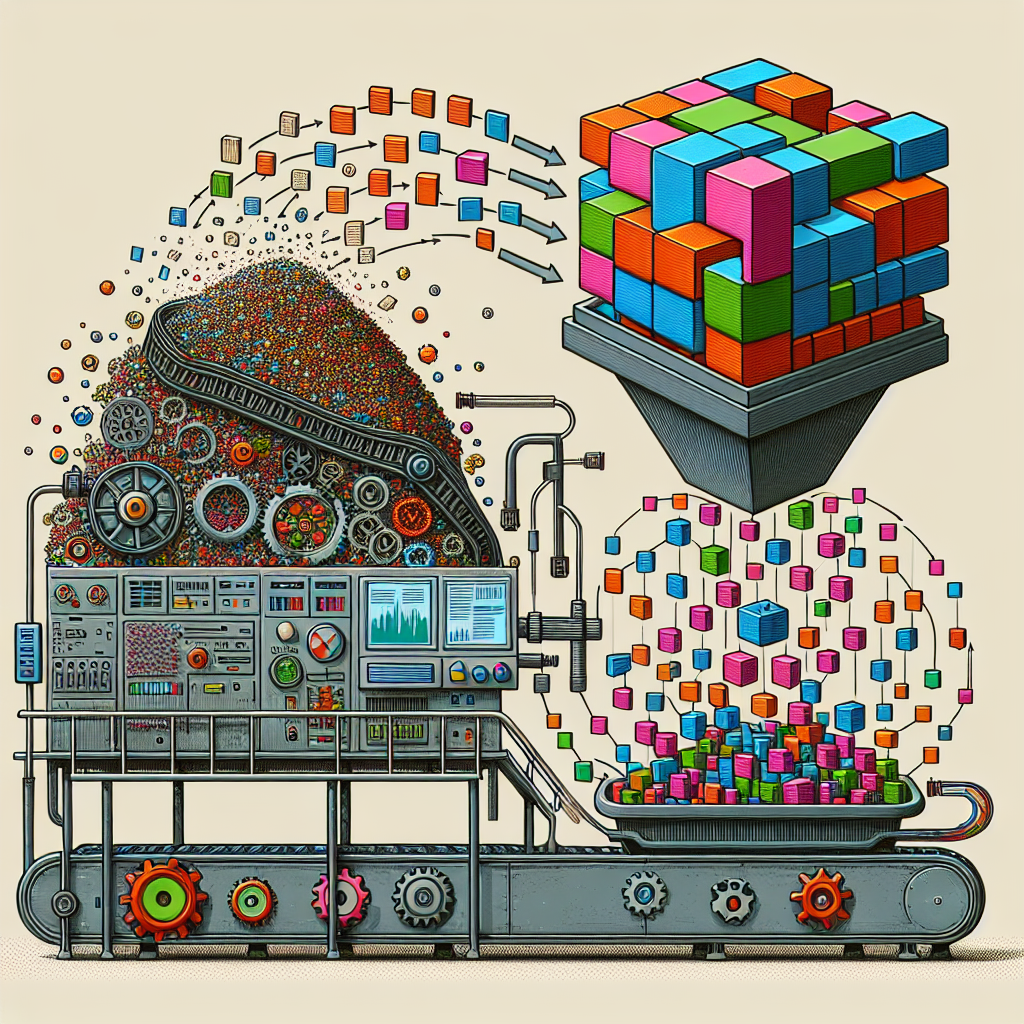

Machine learning is changing industries from healthcare to finance to marketing. However, a machine learning project can only be as good as the data it is built on. DataOps, or data operations, plays a central role in keeping the data used in machine learning models clean and reliable.

High-performance computing (HPC) systems are often used to process and analyze large datasets for machine learning projects. To get the most out of these systems, the data fed into them must be of high quality: extra compute speeds up processing, but it does not correct flawed input.

The Importance of Data Quality

Poor-quality data leads to inaccurate predictions and unreliable results in machine learning models. For a project to succeed, data quality needs to be a priority from the outset rather than something fixed after a model underperforms.

Strategies for Ensuring Clean and Reliable Data

- Data Cleaning: Before feeding your data into a machine learning model, it is important to clean and preprocess it. This may involve removing duplicates, handling missing values, and normalizing numerical features.

- Data Validation: Validate your data against predefined rules or constraints to ensure its accuracy and consistency. This step helps identify any anomalies or errors in the dataset.

- Data Monitoring: Implement monitoring tools to continuously track the quality of your data over time. This allows you to quickly detect any issues or discrepancies that may arise.

- Data Governance: Establish clear guidelines and processes for managing and maintaining your data throughout its lifecycle. This ensures that all stakeholders understand their roles and responsibilities when it comes to data quality.
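
As a rough illustration of the cleaning, validation, and monitoring steps above, here is a minimal sketch in plain Python. The function names (`clean`, `validate`, `missing_rate`), the sample records, and the choices of median imputation and min-max scaling are all assumptions made for this example, not a prescribed implementation; a real pipeline would more likely use a dataframe library and rules that fit its own data.

```python
from statistics import median


def missing_rate(rows, field):
    """Monitoring metric (hypothetical): share of rows where `field` is missing."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(field) is None) / len(rows)


def clean(rows, numeric_fields):
    """Drop exact duplicates, fill missing numbers with the median, min-max scale."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(sorted(row.items()))  # order-independent fingerprint of the row
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))  # copy so the input rows are not modified

    for field in numeric_fields:
        present = [r[field] for r in unique if r.get(field) is not None]
        if not present:
            continue  # nothing to impute from; leave the field untouched
        fill = median(present)
        for r in unique:
            if r.get(field) is None:
                r[field] = fill
        lo = min(r[field] for r in unique)
        hi = max(r[field] for r in unique)
        span = hi - lo
        for r in unique:
            # Scale to [0, 1]; a constant column maps to 0.0.
            r[field] = (r[field] - lo) / span if span else 0.0
    return unique


def validate(rows, rules):
    """Return (row_index, field, value) for every value that is missing or breaks its rule."""
    violations = []
    for i, row in enumerate(rows):
        for field, check in rules.items():
            value = row.get(field)
            if value is None or not check(value):
                violations.append((i, field, value))
    return violations


# Made-up sample records, for illustration only.
raw = [
    {"id": 1, "age": 20, "income": 30000.0},
    {"id": 1, "age": 20, "income": 30000.0},    # exact duplicate
    {"id": 2, "age": None, "income": 50000.0},  # missing age
    {"id": 3, "age": 40, "income": 70000.0},
]

raw_issues = validate(raw, {"age": lambda v: 0 <= v <= 120})
cleaned = clean(raw, ["age", "income"])
clean_issues = validate(cleaned, {"age": lambda v: 0.0 <= v <= 1.0})

print("missing age rate:", missing_rate(raw, "age"))
print("issues before cleaning:", raw_issues)
print("issues after cleaning:", clean_issues)
```

Tracking a metric such as `missing_rate` on each new batch and comparing it with earlier batches is one simple way to notice quality drift over time. Governance is organizational rather than algorithmic, so it is not represented in the sketch.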

Applying these strategies improves the accuracy and reliability of your machine learning models. Time and resources invested in data quality upfront pay off in the long run, because problems caught in the data are cheaper to fix than problems discovered in a model's predictions.